- DomainLab addresses the challenge of poor generalization in deep learning models.

- It offers a modular Python package for domain generalization.

- The package decouples the components of domain generalization methods so that different techniques can be combined.

- Users can specify multiple techniques and hyperparameters within a single configuration file.

- DomainLab promotes experimentation and reproducibility.

- Built-in benchmarking evaluates generalization performance on out-of-distribution data.

- Test coverage above 95% supports dependable results.

- Extensive documentation facilitates ease of use and customization.

- Users retain the flexibility to modify and extend the package as needed.

Main AI News:

Deep learning models have become widely popular in the Artificial Intelligence community, yet a persistent challenge remains: poor generalization. Despite their large capacity, these models often perform poorly on data whose distribution differs from that of the training set. Such distribution shifts between the training and testing phases can significantly degrade model performance.

Domain generalization aims to address this challenge by training models that perform well across diverse data distributions, including domains not seen during training. Despite its potential, the field has lacked robust tools for building and evaluating domain generalization techniques. Existing implementations are often proof-of-concept code that lacks the modularity needed to experiment easily across different datasets.

To meet these needs, a team of researchers has released DomainLab, a modular Python package for domain generalization in deep learning. DomainLab disentangles the components of domain generalization methods so that users can combine different algorithmic elements. This modular approach makes the package more adaptable and simplifies tailoring techniques to specific use cases.

At its core, DomainLab uses a decoupled architecture that separates the construction of regularization losses from the design of the neural networks. As a result, users can specify multiple domain generalization techniques, hierarchical combinations of neural networks, and the associated hyperparameters in a single configuration file.
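
The idea of keeping the regularization loss separate from the network can be illustrated with a small, library-agnostic sketch. This is not DomainLab's API: the names `task_loss`, `vrex_penalty`, `domain_mean_gap`, `REGULARIZERS` and `build_objective`, and the `config` keys, are hypothetical. The objective is assembled from a mapping of technique names to weights (standing in for a configuration file) and only calls the model as a black box, so the same objective could be reused with any network. `vrex_penalty` follows the variance-of-domain-risks idea of V-REx; `domain_mean_gap` is a toy penalty included only for illustration.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical types for this sketch (not DomainLab's API).
Model = Callable[[Sequence[float]], float]       # any callable predictor
Example = Tuple[Sequence[float], float, int]     # (features, target, domain id)
Objective = Callable[[Model, List[Example]], float]


def per_domain_risks(model: Model, batch: List[Example]) -> List[float]:
    """Mean squared error computed separately for each domain in the batch."""
    errors: Dict[int, List[float]] = {}
    for x, y, d in batch:
        errors.setdefault(d, []).append((model(x) - y) ** 2)
    return [sum(e) / len(e) for e in errors.values()]


def task_loss(model: Model, batch: List[Example]) -> float:
    """Ordinary empirical risk: mean squared error over the whole batch."""
    return sum((model(x) - y) ** 2 for x, y, _ in batch) / len(batch)


def vrex_penalty(model: Model, batch: List[Example]) -> float:
    """Variance of the per-domain risks, in the spirit of V-REx."""
    risks = per_domain_risks(model, batch)
    mean = sum(risks) / len(risks)
    return sum((r - mean) ** 2 for r in risks) / len(risks)


def domain_mean_gap(model: Model, batch: List[Example]) -> float:
    """Toy penalty: variance of the average prediction across domains."""
    preds: Dict[int, List[float]] = {}
    for x, _, d in batch:
        preds.setdefault(d, []).append(model(x))
    means = [sum(p) / len(p) for p in preds.values()]
    overall = sum(means) / len(means)
    return sum((m - overall) ** 2 for m in means) / len(means)


# Technique name -> penalty function; a config file would refer to these names.
REGULARIZERS: Dict[str, Objective] = {"vrex": vrex_penalty, "mean_gap": domain_mean_gap}


def build_objective(config: Dict[str, Dict[str, float]]) -> Objective:
    """Combine the task loss with every weighted regularizer named in `config`."""
    terms = [(REGULARIZERS[name], w) for name, w in config["regularizers"].items()]

    def objective(model: Model, batch: List[Example]) -> float:
        return task_loss(model, batch) + sum(w * reg(model, batch) for reg, w in terms)

    return objective


if __name__ == "__main__":
    # Stand-in for a configuration file: two techniques with their weights.
    config = {"regularizers": {"vrex": 1.0, "mean_gap": 0.5}}
    objective = build_objective(config)
    batch = [((1.0,), 1.0, 0), ((2.0,), 2.0, 0), ((1.0,), 2.0, 1), ((3.0,), 3.0, 1)]

    def identity(x: Sequence[float]) -> float:
        return x[0]  # any model works; the objective never inspects its internals

    print(objective(identity, batch))  # 0.25 + 1.0 * 0.0625 + 0.5 * 0.0625 = 0.34375
```

Swapping in a different model, or adding a new entry to `REGULARIZERS`, leaves `build_objective` untouched, which is the practical benefit of keeping the two concerns apart.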

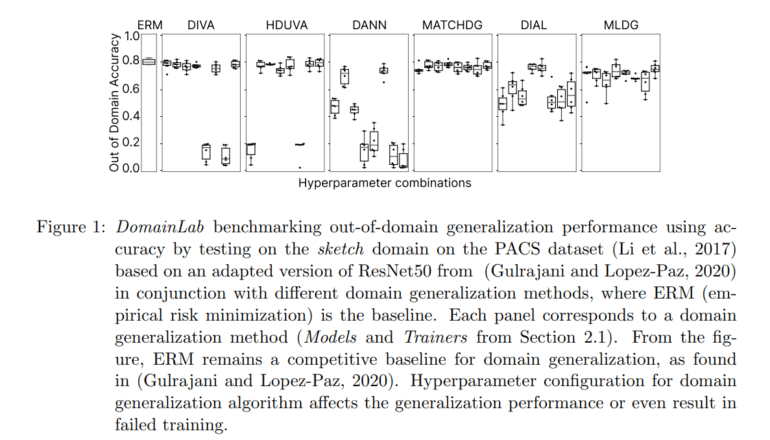

DomainLab is also designed for experimentation and reproducibility. Users can modify individual model components while keeping experiments repeatable. The package also provides benchmarking functionality for evaluating how well neural networks generalize to out-of-distribution data, and benchmarks can run either on a standalone machine or on a high-performance computing (HPC) cluster.
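
A common protocol for measuring out-of-distribution performance in domain generalization is leave-one-domain-out evaluation: train on all domains but one, test on the held-out domain, and repeat over several random seeds. The sketch below shows that protocol in plain Python. It is an illustration under stated assumptions rather than DomainLab's benchmarking code; `leave_one_domain_out` and the toy `threshold_trainer` are hypothetical. Each (held-out domain, seed) pair is an independent run, which is what makes this kind of benchmark easy to spread across cluster jobs.

```python
import random
import statistics
from typing import Callable, Dict, List, Sequence, Tuple

Example = Tuple[float, int]                          # (1-D feature, binary label)
Predictor = Callable[[float], int]
Trainer = Callable[[List[Example], int], Predictor]  # (training data, seed) -> predictor


def threshold_trainer(train: List[Example], seed: int) -> Predictor:
    """Toy learner: pick the threshold t that maximizes training accuracy of x >= t."""
    candidates = sorted({x for x, _ in train})
    random.Random(seed).shuffle(candidates)  # the seed only affects tie-breaking

    def accuracy(t: float) -> float:
        return sum((x >= t) == bool(y) for x, y in train) / len(train)

    best = max(candidates, key=accuracy)
    return lambda x: int(x >= best)


def leave_one_domain_out(
    domains: Dict[str, List[Example]],
    trainer: Trainer,
    seeds: Sequence[int] = (0, 1, 2),
) -> Dict[str, Tuple[float, float]]:
    """Return {held-out domain: (mean test accuracy, population std over seeds)}."""
    results: Dict[str, Tuple[float, float]] = {}
    for held_out, test in domains.items():
        # Train on every domain except the held-out one.
        train = [ex for name, exs in domains.items() if name != held_out for ex in exs]
        accs = []
        for seed in seeds:
            predict = trainer(train, seed)
            accs.append(sum(predict(x) == y for x, y in test) / len(test))
        results[held_out] = (statistics.mean(accs), statistics.pstdev(accs))
    return results


if __name__ == "__main__":
    domains = {
        "A": [(0.1, 0), (0.9, 1)],
        "B": [(0.2, 0), (0.8, 1)],
        "C": [(0.3, 0), (0.7, 1)],
    }
    print(leave_one_domain_out(domains, threshold_trainer))
    # {'A': (1.0, 0.0), 'B': (1.0, 0.0), 'C': (0.5, 0.0)}
```

In this toy run the threshold learned from domains A and B does not transfer to domain C, which is exactly the kind of gap an out-of-distribution benchmark is meant to expose.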

DomainLab also prioritizes dependability and usability. Its test coverage exceeds 95%, which builds confidence in the package's behavior across diverse scenarios, and comprehensive documentation describes its features so that users can make full use of them.

From a user's perspective, DomainLab follows the principle of being 'closed to modification but open to extension': its core features remain stable and well defined, while users can extend the package to fit their own requirements. The package is distributed under the permissive MIT license, which encourages collaboration within the deep learning community.
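
The 'open to extension' principle is often realized with a registry: core code looks techniques up by name, and users add new ones by subclassing and registering them, without editing the core. The sketch below is a generic illustration of that design pattern, not DomainLab's actual extension mechanism; `Penalty`, `register`, `build`, `total_penalty` and both penalty classes are hypothetical.

```python
from abc import ABC, abstractmethod
from typing import Callable, Dict, List, Type


class Penalty(ABC):
    """Hypothetical extension point: a weighted penalty on per-domain losses."""

    def __init__(self, weight: float = 1.0) -> None:
        self.weight = weight

    @abstractmethod
    def __call__(self, domain_losses: List[float]) -> float:
        """Return the unweighted penalty for one set of per-domain losses."""


_REGISTRY: Dict[str, Type[Penalty]] = {}


def register(name: str) -> Callable[[Type[Penalty]], Type[Penalty]]:
    """Class decorator that makes a Penalty subclass available under `name`."""

    def decorator(cls: Type[Penalty]) -> Type[Penalty]:
        if name in _REGISTRY:
            raise ValueError(f"penalty {name!r} is already registered")
        _REGISTRY[name] = cls
        return cls

    return decorator


def build(spec: Dict[str, float]) -> List[Penalty]:
    """Core code: instantiate penalties from a {name: weight} spec, e.g. read from a config."""
    return [_REGISTRY[name](weight) for name, weight in spec.items()]


def total_penalty(penalties: List[Penalty], domain_losses: List[float]) -> float:
    """Weighted sum of all configured penalties."""
    return sum(p.weight * p(domain_losses) for p in penalties)


@register("worst_domain")
class WorstDomain(Penalty):
    """Shipped with the core: the largest per-domain loss."""

    def __call__(self, domain_losses: List[float]) -> float:
        return max(domain_losses)


# A user extension, e.g. in a separate module: nothing above is modified.
@register("loss_range")
class LossRange(Penalty):
    """Gap between the worst and the best domain."""

    def __call__(self, domain_losses: List[float]) -> float:
        return max(domain_losses) - min(domain_losses)


if __name__ == "__main__":
    penalties = build({"worst_domain": 1.0, "loss_range": 0.5})
    print(total_penalty(penalties, [0.25, 0.75, 0.5]))  # 0.75 + 0.5 * 0.5 = 1.0
```

The core functions `build` and `total_penalty` never name a concrete class, so new techniques plug in through registration alone; the trade-off is that name collisions must be guarded against, which `register` does by raising `ValueError`.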

Conclusion:

The introduction of DomainLab is a notable advance for domain generalization, offering a comprehensive toolkit for tackling poor model performance caused by shifts in data distribution. Its modular design supports adaptability and experimentation and streamlines the evaluation of deep learning models. The package is poised to encourage further work in the field, helping researchers and practitioners build models that generalize more reliably.