- HETAL is a privacy-preserving transfer learning algorithm built on homomorphic encryption.

- HETAL ensures data privacy by encrypting data features for fine-tuning on a server without compromising confidentiality.

- The core of HETAL lies in optimized encrypted matrix multiplications and novel softmax approximation algorithms.

- Extensive experimentation demonstrates HETAL’s effectiveness across benchmark datasets with minimal accuracy loss and practical runtimes.

- The work advances privacy-preserving machine learning by showing that encrypted fine-tuning can run with practical efficiency.

Main AI News:

In today’s data-driven landscape, safeguarding privacy remains paramount. Legislative frameworks such as the EU’s General Data Protection Regulation (GDPR) underscore the need to protect personal data. In machine learning, preserving privacy becomes more complex when clients want to use pre-trained models with their own data. The conventional approach, in which clients share extracted data features with the model provider, risks exposing sensitive client information to attacks such as feature inversion, where the original inputs are reconstructed from their features.

Traditionally, privacy-preserving transfer learning has relied on methodologies like secure multi-party computation (SMPC), differential privacy (DP), and homomorphic encryption (HE). While SMPC entails considerable communication overhead and DP may compromise accuracy, HE-based approaches have shown promise despite facing computational hurdles.

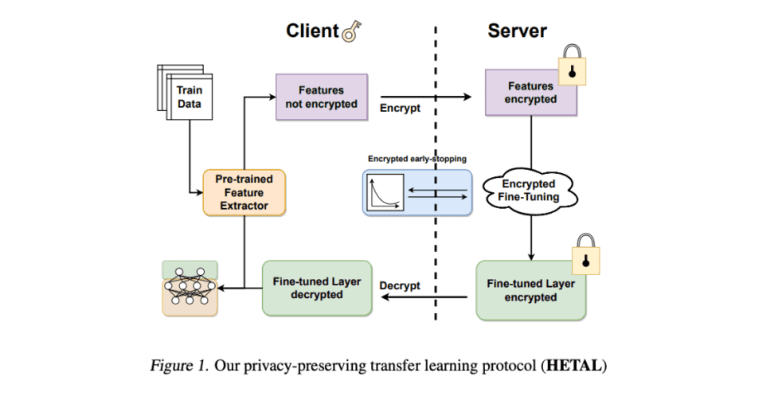

Enter HETAL, an algorithm developed by a team of researchers that uses homomorphic encryption to enable privacy-preserving transfer learning. As depicted in Figure 1, the client encrypts its data features and transmits them to a server, which fine-tunes the model on the encrypted features while the underlying data stays confidential.

At the heart of HETAL lies an optimized procedure for encrypted matrix multiplication, a pivotal operation in neural network training. The researchers introduce two algorithms, DiagABT and DiagATB (which, as their names suggest, target products of the form AB^T and A^TB), that substantially reduce computational overhead compared with prior methods. HETAL also introduces a new approximation algorithm for the softmax function, a critical element of neural network classifiers. Whereas conventional approximations are accurate only on a limited input range, HETAL’s algorithm handles input values across exponentially large intervals, which keeps training accurate over many epochs.
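To make the two ingredients above more concrete, here is a minimal plaintext sketch, not HETAL's encrypted implementation. It assumes that fine-tuning means training a softmax-regression layer on extracted features, and it shows where products of the form AB^T and A^TB arise in one gradient step. The Taylor polynomial at the end is only a stand-in for "an approximation with a limited range"; it is not the approximation HETAL uses. All function names (`train_step`, `taylor_exp`, `make_toy_data`, and so on) are hypothetical.

```python
import math
import random

def transpose(M):
    return [list(col) for col in zip(*M)]

def matmul(A, B):
    # Plain (m x k) times (k x n) matrix product on nested lists.
    Bt = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]

def softmax_rows(Z):
    # Exact, numerically stable softmax per row. Under homomorphic encryption
    # this step would have to be replaced by a polynomial approximation.
    out = []
    for row in Z:
        m = max(row)
        e = [math.exp(z - m) for z in row]
        s = sum(e)
        out.append([v / s for v in e])
    return out

def train_step(X, Y, W, lr=0.1):
    """One gradient-descent step of softmax regression.
    X: n x d features, Y: n x c one-hot labels, W: c x d weights."""
    P = softmax_rows(matmul(X, transpose(W)))      # X W^T: a product of the form A B^T
    R = [[p - y for p, y in zip(pr, yr)] for pr, yr in zip(P, Y)]
    G = matmul(transpose(R), X)                    # (P - Y)^T X: a product of the form A^T B
    n = len(X)
    return [[w - lr * g / n for w, g in zip(wr, gr)] for wr, gr in zip(W, G)]

def cross_entropy(X, Y, W):
    # Mean negative log-probability assigned to the true class.
    P = softmax_rows(matmul(X, transpose(W)))
    total = 0.0
    for pr, yr in zip(P, Y):
        for p, y in zip(pr, yr):
            if y == 1.0:
                total -= math.log(max(p, 1e-300))
    return total / len(X)

def make_toy_data(seed=0, n_per_class=20, n_classes=3, dim=4):
    # Hypothetical stand-in for extracted features: one noisy cluster per class.
    rng = random.Random(seed)
    X, Y = [], []
    for k in range(n_classes):
        for _ in range(n_per_class):
            X.append([(2.0 if j == k else 0.0) + rng.gauss(0.0, 0.5) for j in range(dim)])
            Y.append([1.0 if j == k else 0.0 for j in range(n_classes)])
    return X, Y

def taylor_exp(x, degree=7):
    # Taylor polynomial of exp around 0: accurate near 0, unusable far from it.
    return sum(x ** k / math.factorial(k) for k in range(degree + 1))

if __name__ == "__main__":
    X, Y = make_toy_data()
    W = [[0.0] * 4 for _ in range(3)]
    print("loss before:", cross_entropy(X, Y, W))
    for _ in range(200):
        W = train_step(X, Y, W)
    print("loss after: ", cross_entropy(X, Y, W))
    for x in (1.0, -10.0):
        print("x =", x, "polynomial:", taylor_exp(x), "exact:", math.exp(x))
```

Running the script prints the training loss before and after 200 steps, then compares the polynomial with the exact exponential at a small and a large input. The second comparison illustrates why a fixed low-degree approximation breaks down once logits drift outside a narrow interval during training, which is the limitation the article says HETAL's softmax approximation is designed to avoid.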

The efficacy of HETAL is underscored by extensive experiments on five benchmark datasets, including MNIST, CIFAR-10, and DermaMNIST, as detailed in Table 1. The encrypted models reached accuracy within 0.51% of their unencrypted counterparts, with practical runtimes that were often under an hour.

Conclusion:

The introduction of HETAL marks a significant step for privacy-preserving machine learning. By integrating homomorphic encryption into transfer learning while keeping client data private, it has the potential to reshape the market landscape. Businesses operating in data-sensitive domains can use algorithms like HETAL to mitigate privacy risks and open new avenues for innovation in machine learning applications.