TL;DR:

- NVIDIA introduces ChatQA, a family of conversational QA models.

- The models are designed to match or exceed GPT-4’s accuracy on conversational QA.

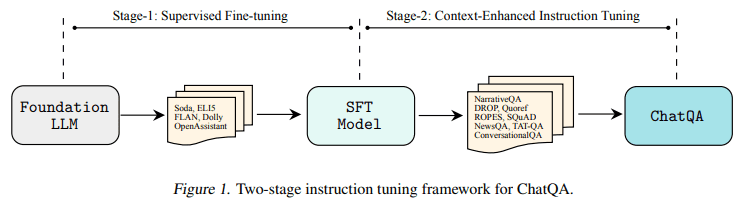

- ChatQA employs a two-stage instruction tuning method for enhanced zero-shot QA.

- ChatQA-70B, one model in the family, outperforms GPT-4 on the average score across ten conversational QA datasets.

- The methodology involves supervised fine-tuning and context-enhanced instruction tuning.

Main AI News:

Conversational question answering (QA) has changed considerably with the arrival of robust large language models (LLMs) such as GPT-4. These models can hold multi-turn conversations and generate responses on the fly, which makes interactions more intuitive and raises the accuracy of automated responses, all without dataset-specific fine-tuning.

Improving the zero-shot accuracy of LLMs on conversational QA remains a core challenge. Previous attempts have been only partly effective and have not fully exploited the capabilities of these models. The research aims to refine these methods and set new accuracy benchmarks in conversational QA.

Current approaches to conversational QA predominantly involve fine-tuning single-turn query retrievers on multi-turn QA datasets. These techniques work to a degree but leave room for improvement, particularly in real-world applications. The research introduces a new approach intended to address these limitations.

Enter ChatQA, a family of conversational QA models from researchers at NVIDIA, designed to match or exceed the accuracy of GPT-4. ChatQA uses a novel two-stage instruction tuning method that significantly improves the zero-shot conversational QA results obtained from LLMs.

The methodology behind ChatQA has two stages. The first is supervised fine-tuning (SFT) on a diverse set of datasets, which lays the groundwork for the model’s ability to follow instructions. The second, known as context-enhanced instruction tuning, integrates contextualized QA datasets into the instruction tuning process. Together, the two stages ensure that the model both follows instructions effectively and produces context-aware, retrieval-augmented responses in conversational QA scenarios.
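To make the second stage more concrete, here is a minimal sketch of how one contextualized QA sample might be turned into a (prompt, target) training pair. The article does not describe the actual data format, so the `Turn` class, the `build_stage2_example` function, the prompt layout, and the sample dialogue are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Turn:
    role: str  # "User" or "Assistant" (hypothetical role labels)
    text: str


def build_stage2_example(
    system: str, context: str, turns: List[Turn], answer: str
) -> Tuple[str, str]:
    """Return a (prompt, target) pair for one context-grounded QA sample.

    The layout (system message, context, dialogue history, answer slot) is an
    assumed template, not the format used by the ChatQA authors.
    """
    if not turns or turns[-1].role != "User":
        raise ValueError("the dialogue must end with a user question")
    # Flatten the multi-turn history so earlier turns stay visible to the model.
    dialogue = "\n\n".join(f"{t.role}: {t.text}" for t in turns)
    prompt = f"System: {system}\n\n{context}\n\n{dialogue}\n\nAssistant:"
    # Only the target would contribute to the fine-tuning loss.
    return prompt, " " + answer


# Made-up sample: the last question only makes sense given the earlier turns.
prompt, target = build_stage2_example(
    system="Answer the question using only the given context.",
    context="The warranty covers parts for two years and labor for one year.",
    turns=[
        Turn("User", "How long are parts covered?"),
        Turn("Assistant", "Parts are covered for two years."),
        Turn("User", "And labor?"),
    ],
    answer="Labor is covered for one year.",
)
print(prompt + target)
```

The follow-up question "And labor?" cannot be answered without both the context passage and the earlier turns, which is the kind of context-aware behavior the second stage is described as teaching.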

Within the ChatQA family, ChatQA-70B stands out: it surpasses GPT-4 on the average score across ten conversational QA datasets, and it does so without relying on synthetic data from existing ChatGPT models. This result supports the effectiveness of the two-stage instruction tuning method employed by ChatQA.
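A comparison based on an average across datasets is worth reading carefully, because a model can lead on the average without leading on every dataset. The sketch below assumes the average is an unweighted mean over datasets, which the article does not state. The model names, dataset names, and scores are placeholders, not reported results.

```python
from statistics import mean
from typing import Dict, List


def macro_average(scores: Dict[str, float]) -> float:
    """Unweighted mean of per-dataset scores (assumed averaging scheme)."""
    if not scores:
        raise ValueError("no scores given")
    return mean(scores.values())


def datasets_won(a: Dict[str, float], b: Dict[str, float]) -> List[str]:
    """Datasets on which model `a` scores strictly higher than model `b`."""
    if a.keys() != b.keys():
        raise ValueError("both models must be scored on the same datasets")
    return sorted(name for name in a if a[name] > b[name])


# Placeholder numbers for illustration only; NOT taken from any paper.
model_a = {"dataset_1": 40.0, "dataset_2": 70.0, "dataset_3": 55.0}
model_b = {"dataset_1": 50.0, "dataset_2": 60.0, "dataset_3": 52.0}

print(macro_average(model_a))          # 55.0
print(macro_average(model_b))          # 54.0
print(datasets_won(model_b, model_a))  # ['dataset_1']
```

With these placeholder values, the first model has the higher average while the second still wins one dataset, so an average-score lead should not be read as a lead on every benchmark.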

Conclusion:

The introduction of ChatQA by NVIDIA marks a notable advance in the conversational QA market. The models aim to surpass GPT-4 in accuracy while keeping interactions user-friendly and precise. With a two-stage instruction tuning method and a higher average score than GPT-4 across ten datasets, ChatQA could reshape the market by offering more accurate and context-aware conversational QA solutions.