TL;DR:

- Dataiku unveils the LLM Mesh at the Everyday AI Conference in New York.

- LLM Mesh addresses challenges in integrating Large Language Models (LLMs) in enterprises.

- Launch partners include Snowflake, Pinecone, and AI21 Labs.

- Challenges in Generative AI adoption: no centralized administration, weak data and model permission controls, toxic content, exposure of personally identifiable information, and no cost monitoring.

- LLM Mesh offers universal AI service routing, secure access, data screening, and cost tracking.

- Dataiku’s LLM Mesh features are to be released in public and private previews in October.

- The CTO of Dataiku, Clément Stenac, highlights the potential of LLM Mesh in bridging the gap between AI promise and reality.

Main AI News:

Dataiku unveiled its LLM Mesh at the Everyday AI Conference in New York. The LLM Mesh addresses the need for a robust, scalable, and secure platform built specifically for integrating Large Language Models (LLMs) in the enterprise. Dataiku is launching it together with Snowflake, Pinecone, and AI21 Labs as its LLM Mesh Launch Partners.

Navigating the Challenges of Generative AI in Enterprises

Generative AI has clear potential in enterprise applications. However, organizations have struggled to use the technology to its fullest extent. The obstacles include the absence of centralized administration, insufficient controls over data and model permissions, limited safeguards against toxic content and against the use of personally identifiable information, and the absence of effective cost monitoring. Many enterprises also need guidance on best practices for making full use of this fast-growing ecosystem.

LLM Mesh: Unifying Gen AI Applications

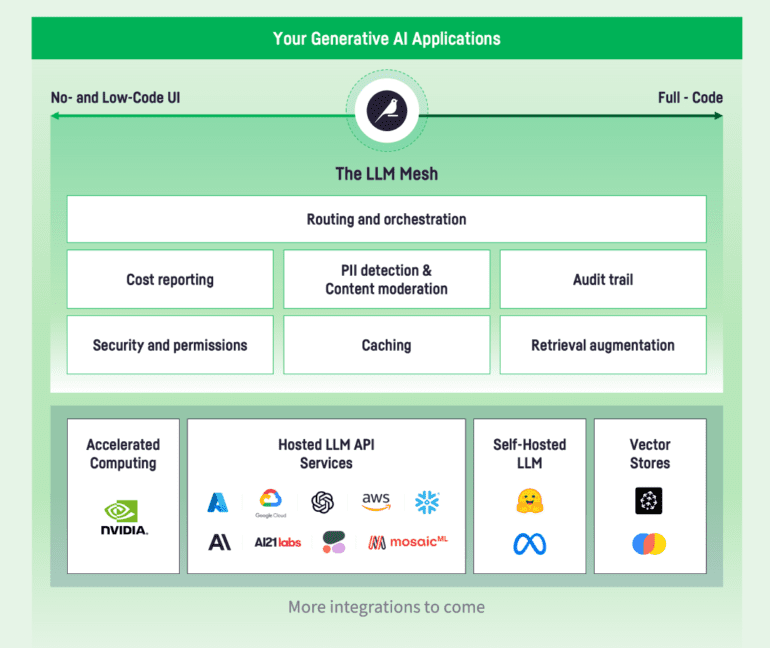

Building on the Generative AI capabilities that Dataiku introduced in June 2023, the LLM Mesh is designed to overcome these obstacles. It serves as the common backbone for Gen AI applications and gives companies the components they need to build secure, scalable applications on top of LLMs. Because the LLM Mesh sits between LLM service providers and end-user applications, enterprises can select the most cost-effective models for their current and future needs. This placement also helps safeguard data and responses and supports the creation of reusable components for scalable application development.

The components of the LLM Mesh include universal AI service routing, secure access and auditing for AI services, safeguards for private data screening and response moderation, and performance and cost tracking. The LLM Mesh also provides standardized components for application development, which help ensure quality and consistency and deliver the control and performance that businesses expect.
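To make the idea of a layer between applications and LLM providers more concrete, here is a minimal Python sketch of a gateway that combines the components listed above: routing, access control, auditing, private data screening, response moderation, and cost tracking. It is an illustration only and is not Dataiku's API. Every name in it (`MeshGateway`, `Provider`, `query`, the email pattern, the per-character pricing) is a hypothetical assumption made for this example.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set

# Deliberately naive email pattern, standing in for real PII detection.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


@dataclass
class Provider:
    """Hypothetical LLM backend with a flat price per 1,000 prompt characters."""
    name: str
    cost_per_1k_chars: float
    complete: Callable[[str], str]


@dataclass
class MeshGateway:
    """Hypothetical gateway placed between applications and LLM providers."""
    providers: Dict[str, Provider]
    permissions: Dict[str, Set[str]]  # user -> provider names the user may call
    blocked_terms: Set[str] = field(default_factory=set)  # lowercase terms
    audit_log: List[dict] = field(default_factory=list)
    spend: Dict[str, float] = field(default_factory=dict)  # user -> total cost

    def query(self, user: str, provider_name: str, prompt: str) -> str:
        # Access control: refuse and record the attempt if the user lacks permission.
        if provider_name not in self.permissions.get(user, set()):
            self.audit_log.append(
                {"user": user, "provider": provider_name, "status": "denied"}
            )
            raise PermissionError(f"{user} may not use {provider_name}")

        # Routing: look up the requested backend by name.
        provider = self.providers[provider_name]

        # Private data screening: redact emails before the prompt leaves the gateway.
        screened = EMAIL_RE.sub("[REDACTED_EMAIL]", prompt)
        response = provider.complete(screened)

        # Response moderation: withhold responses that contain a blocked term.
        if any(term in response.lower() for term in self.blocked_terms):
            response = "[response withheld by moderation]"

        # Cost tracking and auditing for the completed call.
        cost = len(screened) / 1000 * provider.cost_per_1k_chars
        self.spend[user] = self.spend.get(user, 0.0) + cost
        self.audit_log.append(
            {"user": user, "provider": provider_name, "status": "ok", "cost": cost}
        )
        return response


# Usage with a stand-in "echo" provider instead of a real LLM service.
gateway = MeshGateway(
    providers={"echo": Provider("echo", 0.5, lambda p: "echo: " + p)},
    permissions={"alice": {"echo"}},
)
print(gateway.query("alice", "echo", "Contact bob@example.com"))
# prints: echo: Contact [REDACTED_EMAIL]
```

Because applications call only the gateway, swapping one provider for a cheaper one is a change to the `providers` mapping rather than to application code, which is the flexibility the article attributes to placing a layer between providers and applications.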

Unlocking Enterprise-Grade Generative AI Applications with the LLM Mesh

The Dataiku features that power the LLM Mesh will be released in public and private previews starting this October. Clément Stenac, Chief Technology Officer and co-founder at Dataiku, said, “The LLM Mesh represents a pivotal step in AI. At Dataiku, we’re bridging the gap between the promise and reality of using Generative AI in the enterprise. We believe the LLM Mesh provides the structure and control many have sought, paving the way for safer, faster GenAI deployments that deliver real value.”

The Unveiling of Dataiku’s LLM Mesh Launch Partners

Dataiku is committed to the effective and broad use of LLMs, vector databases, and diverse compute infrastructures in the enterprise. Rather than duplicating existing capabilities, Dataiku aims to enhance and democratize access to these technologies. Its LLM Mesh Launch Partners are Snowflake, Pinecone, and AI21 Labs, which represent key components of the LLM Mesh: containerized data and compute capabilities, vector databases, and LLM builders.

Torsten Grabs, Senior Director of Product Management at Snowflake, emphasizes the significance of this collaboration, stating, “We are excited about the vision of the LLM Mesh as we know the true value is not just getting LLM-powered applications to production — it’s about democratizing AI in a safe and secure manner.”

Chuck Fontana, VP of Business Development at Pinecone, adds, “LLM Mesh is more than an architecture — it’s a pathway. Together, Dataiku and Pinecone are setting a new standard, providing a way that others in the industry can align with, helping to overcome barriers the market faces in building enterprise-grade GenAI applications at scale.”

Pankaj Dugar, SVP and GM, North America at AI21 Labs, underscores the importance of collaboration and diversity in driving innovation, stating, “In today’s evolving technological landscape, it’s paramount that we foster a diverse, tightly integrated ecosystem within the Generative AI stack for the benefit of our customers.”

Conclusion:

Dataiku’s introduction of the LLM Mesh, together with its launch partnerships with Snowflake, Pinecone, and AI21 Labs, targets the main obstacles to enterprise Generative AI: administration, data control, content safety, and cost. By providing a common platform for scalable Generative AI applications, the LLM Mesh could make AI deployments safer and more efficient and accelerate the adoption of Generative AI across industries.