- DeepMind and Anthropic researchers introduce “Equal-Info Windows” for LLM training on compressed text.

- The method achieves higher compression rates while maintaining LLM performance.

- Utilizes dual-model system: a smaller model for compression (M1) and larger LLM trained on compressed output (M2).

- Segments text into uniform blocks for compression and tokenization.

- Utilizes C4 dataset for training, ensuring consistent compression rates and stable inputs for LLM.

- Results show significant improvements in perplexity scores and inference speeds.

- Models trained with “Equal-Info Windows” outperform traditional methods by up to 30% in perplexity benchmarks.

- Accelerates inference speed by up to 40% compared to conventional training setups.

Main AI News:

The journey to maximize the potential of Large Language Models (LLMs) has been hindered by the constraints of subword tokenization, a method effective to an extent, yet demanding extensive computational resources. This limitation not only curbs the scalability of models but also constrains training on extensive datasets without incurring exorbitant expenses. The dual challenge has been clear: how to drastically condense text to facilitate efficient model training while maintaining or enhancing model performance.

Recent research has explored various avenues, including the utilization of transformer language models like the Chinchilla model, which exhibits significant text size reduction capabilities. Innovations in Arithmetic Coding, tailored for enhanced LLM compatibility, and the investigation of “token-free” language modeling through convolutional downsampling present alternative routes for neural tokenization. Additionally, leveraging learned tokenizers in audio compression and integrating GZip’s modeling components for diverse AI tasks extend the applicability of compression algorithms. Studies incorporating static Huffman coding with n-gram models offer a simpler approach, prioritizing ease of implementation over maximal compression efficiency.

In a groundbreaking development, Google DeepMind and Anthropic researchers have introduced a pioneering method for LLM training on neurally compressed text, dubbed ‘Equal-Info Windows.’ This innovative approach achieves significantly higher compression rates compared to conventional methods without compromising the learnability or performance of LLMs. The crux of this methodology lies in handling highly compressed text while preserving efficiency and efficacy in model training and inference tasks.

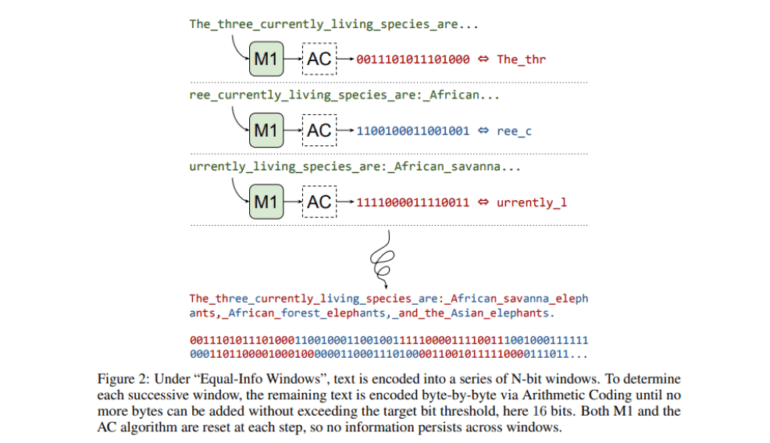

The technique employs a dual-model framework: M1, a smaller language model tasked with text compression using Arithmetic Coding, and M2, a larger LLM trained on the compressed output. Text is segmented into uniform blocks, each compressing to a specific bit length, and then tokenized for M2 training. The researchers utilize the C4 (Cleaned Common Crawl Corpus) dataset for model training, aiming to sustain efficiency and effectiveness in model performance across extensive datasets by ensuring consistent compression rates and providing stable inputs for the LLM.

Results demonstrate that models trained using “Equal-Info Windows” surpass traditional methods significantly. LLMs employing this technique exhibit remarkable improvements in perplexity scores and inference speeds. Notably, models trained with “Equal-Info Windows” on perplexity benchmarks outperform byte-level baselines by up to 30%, showcasing a substantial enhancement in efficiency. Moreover, there is a noticeable acceleration in inference speed, with models showcasing up to a 40% increase in processing speed compared to conventional training setups. These metrics underscore the efficacy of the proposed method in augmenting the efficiency and performance of large language models trained on compressed text.

Conclusion:

The introduction of “Equal-Info Windows” represents a significant advancement in AI compression techniques for LLM training. This innovation not only addresses the challenge of efficiently compressing text but also enhances the performance of large language models. For businesses operating in AI and natural language processing sectors, adopting such techniques can lead to more efficient model training, improved performance, and, ultimately, a competitive edge in the market.