TL;DR:

- “Distilling Step-by-Step” technique developed by Google and the University of Washington enhances the training of small, specialized machine learning models.

- It extracts informative natural language rationales from large language models (LLMs).

- Rationales augment training, reducing data requirements and enabling the use of smaller models.

- Empirical trials show substantial performance gains with reduced data sizes.

- Smaller models outperform larger ones, offering efficiency gains in various NLP tasks.

- This innovation democratizes advanced language models for wider applications.

Main AI News:

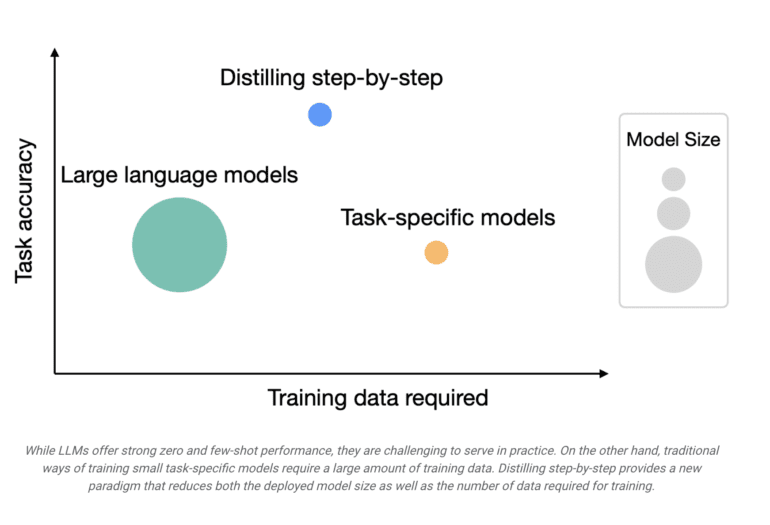

In the ever-evolving landscape of natural language processing, large language models (LLMs) have reigned supreme, ushering in an era of unparalleled zero-shot and few-shot learning capabilities. However, their omnipotence comes at a cost – the monumental computational resources they demand. A single LLM wielding a staggering 175 billion parameters gobbles up a jaw-dropping 350GB of GPU memory, locking out many research teams, especially those reliant on low-latency performance.

Tackling this conundrum head-on, a dynamic collaboration between the University of Washington and Google has given birth to “Distilling Step-by-Step,” a pioneering breakthrough unveiled at ACL2023. This innovation reimagines the training process for small, task-specific machine learning models, offering a lifeline to those grappling with limited data and resources.

Distilling Step-by-Step’s magic lies in its ability to extract invaluable natural language rationales from LLMs. These rationales, akin to intermediate reasoning steps, serve as enhanced guidance during the training of smaller models. Leveraging these rationales alongside standard task labels paves the way for a symbiotic relationship that propels model performance to unprecedented heights.

Here’s a glimpse into the two-step dance of implementing Distilling Step-by-Step. First, the method employs CoT prompting to coax rationales from an LLM, equipping it to generate rationales for novel inputs. Then, these rationales become the secret sauce in training compact models via a multi-task learning framework. Task prefixes act as the North Star, steering the model’s course between label prediction and rationale generation.

In empirical trials, a formidable 540-billion-parameter LLM took center stage, flanked by T5 models tailored for task-specific endeavors. The results were nothing short of astonishing. Distilling Step-by-Step flexed its muscles, delivering stellar performance gains while wielding significantly reduced data requirements. For instance, on the challenging e-SNLI dataset, it outshone standard fine-tuning, achieving remarkable results with just 12.5% of the full dataset. This trend extended across various NLP tasks, from ANLI to CQA and SVAMP.

Even more impressive was Distilling Step-by-Step’s knack for producing superior results with considerably smaller model sizes compared to their few-shot CoT-prompted LLM counterparts. On the e-SNLI dataset, a modest 220-million-parameter T5 model outperformed a colossal 540-billion-parameter PaLM. In the ANLI arena, a 770-million-parameter T5 model triumphed over a 540-billion-parameter PaLM by a staggering factor of 700, underscoring the tantalizing efficiency gains that lie within reach.

Most notably, Distilling Step-by-Step showcased its prowess by outshining few-shot LLMs while wielding smaller models and a reduced data appetite. On the ANLI battlefield, a 770-million-parameter T5 model outperformed a 540-billion-parameter PaLM using just 80% of the full dataset – an achievement that eludes traditional fine-tuning.

Conclusion:

The introduction of “Distilling Step-by-Step” represents a major stride forward in natural language processing. This innovative approach not only overcomes the limitations imposed by resource-intensive LLMs but also enhances efficiency and accessibility. With smaller models capable of delivering superior results, this development holds the potential to reshape the market landscape, making advanced language models more attainable and practical for a wide range of applications.