TL;DR:

- PC-NeRF targets 3D scene reconstruction and novel view synthesis from sparse LiDAR data.

- It introduces hierarchical spatial partitioning and optimizes the scene representation at several levels.

- By dividing the environment into parent and child segments, PC-NeRF efficiently utilizes sparse data.

- The reported experiments show high accuracy in novel LiDAR view synthesis and 3D reconstruction.

- PC-NeRF outperforms traditional methods in handling increased sparsity in LiDAR data, particularly in large-scale outdoor environments.

Main AI News:

Autonomous vehicles must navigate complex terrain precisely and dependably. Two technologies are central to that goal: 3D scene reconstruction and novel view synthesis, both of which often rely on sparse Light Detection and Ranging (LiDAR) data. Existing methods struggle here, particularly in making effective use of sparse measurements in large, changing outdoor environments.

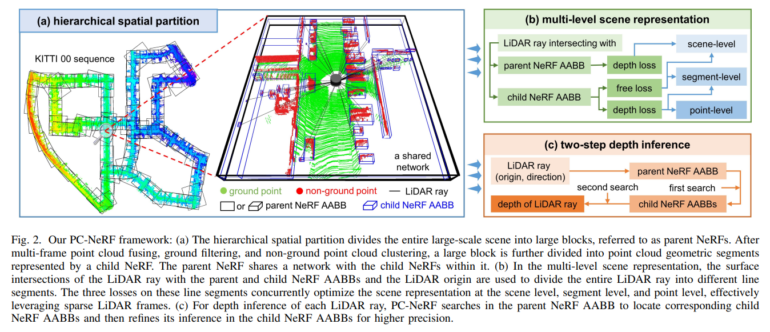

Researchers at the Beijing Institute of Technology have introduced Parent-Child Neural Radiance Fields (PC-NeRF), a framework built on hierarchical spatial partitioning for 3D scene reconstruction and novel view synthesis from sparse LiDAR frames. It divides the environment into interconnected segments at several levels, from the whole scene down to individual points, so that sparse LiDAR data is used more efficiently.

At the core of PC-NeRF is the division of the captured environment into parent and child segments, with the scene representation optimized at each level of the hierarchy. The parent NeRF covers a large environmental block, while the child NeRFs focus on detailed segments within that block. This split helps the model capture fine detail and make better use of sparse data in outdoor settings that have long challenged conventional neural radiance fields.

The implementation first partitions the environment into parent NeRFs, then subdivides each into child NeRFs based on the spatial distribution of LiDAR points. This division supports a detailed environmental representation and speeds up the acquisition of volumetric scene data. The multi-level representation is what lets the model use sparse LiDAR data effectively and distinguishes PC-NeRF from its predecessors.
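To make the parent-child idea concrete, here is a minimal Python sketch of a two-level partition of a LiDAR point cloud. It is an illustration under stated assumptions, not the authors' implementation. The parent is taken to be the axis-aligned bounding box of all points, and the children are the bounding boxes of points that fall into the same cell of a uniform grid. The grid rule, the `cell` parameter, and all function names are hypothetical stand-ins for whatever segmentation PC-NeRF actually uses.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
Box = Tuple[Point, Point]  # (min corner, max corner)
CellKey = Tuple[int, ...]


def bounding_box(points: List[Point]) -> Box:
    """Axis-aligned bounding box of a non-empty list of 3D points."""
    xs, ys, zs = zip(*points)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def group_by_cell(points: List[Point], cell: float) -> Dict[CellKey, List[Point]]:
    """Group points by the grid cell (edge length `cell`) they fall into.

    Assumption: a uniform grid is used only as a simple proxy for
    "spatial distribution of LiDAR points"; the source does not specify the rule.
    """
    groups: Dict[CellKey, List[Point]] = defaultdict(list)
    for p in points:
        key = tuple(int(c // cell) for c in p)
        groups[key].append(p)
    return dict(groups)


def parent_child_boxes(points: List[Point], cell: float) -> Tuple[Box, List[Box]]:
    """Return one parent box for the whole block and one child box per occupied cell."""
    parent = bounding_box(points)
    children = [bounding_box(g) for g in group_by_cell(points, cell).values()]
    return parent, children


if __name__ == "__main__":
    # Hypothetical toy cloud: two small clusters far apart.
    cloud = [(0.5, 0.5, 0.0), (1.0, 0.8, 0.2), (9.0, 9.5, 1.0), (9.9, 9.1, 1.5)]
    parent, children = parent_child_boxes(cloud, cell=5.0)
    print("parent:", parent)
    for box in children:
        print("child:", box)
```

In this sketch, each child box is contained in the parent box by construction, which mirrors the described roles: the parent covers a large block, and the children cover tighter regions where points are actually present.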

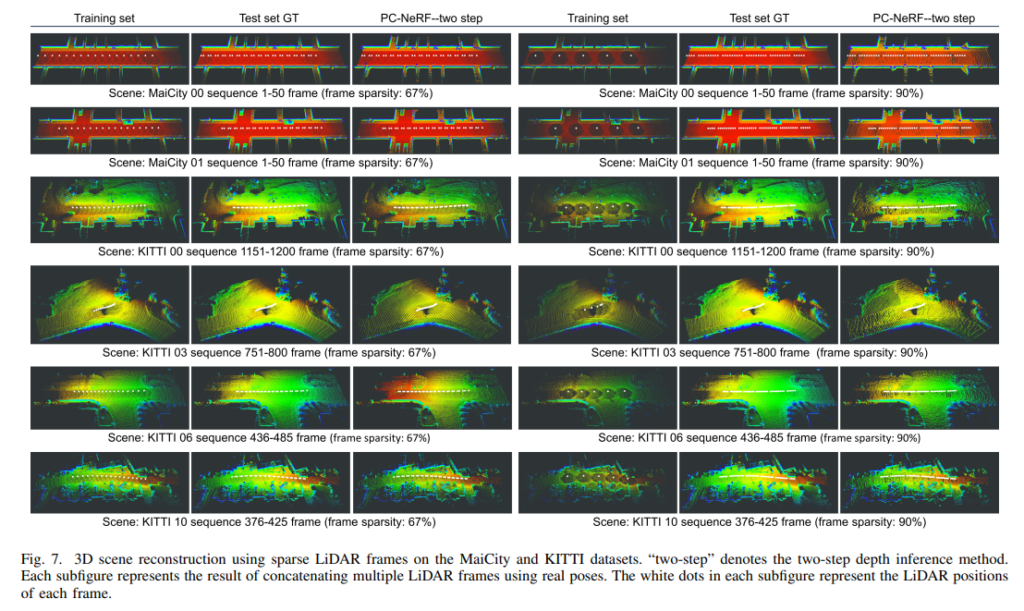

Experimental validation shows high accuracy in novel LiDAR view synthesis and 3D reconstruction across large scenes. PC-NeRF reaches high precision with few training epochs and copes with scenarios where LiDAR frames are sparse. Its deployment efficiency also suggests it could improve the safety and reliability of navigation systems in autonomous driving.

PC-NeRF is also robust to increased sparsity in LiDAR data, a common situation in real-world applications. In large-scale outdoor environments in particular, it synthesizes novel views and reconstructs 3D models from limited LiDAR frames. These results point to the framework’s potential to advance autonomous driving and to broaden the use of neural radiance fields in other domains.

Source: Marktechpost Media Inc.

Conclusion:

PC-NeRF’s approach to 3D scene reconstruction and view synthesis is a notable advance, particularly for autonomous driving. Its efficient use of sparse LiDAR data and its performance relative to traditional methods in real-world scenarios make it a promising option for improving the safety and reliability of navigation systems. The work also highlights the growing potential of neural radiance fields across diverse domains.